Poor data quality in the insurance industry can have significant financial and regulatory impacts, leading to operational inefficiencies, revenue losses, and financial penalties. Data errors affect claim processing, underwriting, and billing, resulting in higher administrative costs, delayed payouts, and customer dissatisfaction. They also hamper fraud detection, leading to losses from illegitimate claims, and limit opportunities for cross-selling and up-selling due to inaccurate customer profiles.

Research from Experian Data Quality shows that poor data quality has a direct impact on the bottom line of 88% of companies, with an average company losing 12% of its revenue. Conversely, by leveraging accurate and reliable data, insurers can optimize their operations, deliver better products and services, and ultimately provide enhanced value to their customers.

On the regulatory front, poor data quality can result in non-compliance with data protection laws (e.g., GDPR, CCPA) and errors in mandatory reporting, attracting penalties and reputational harm. Persistent data issues may trigger audits, stricter regulatory oversight, or even solvency risks due to flawed risk assessments. These challenges can compromise compliance with stress testing and risk management requirements, undermining confidence in the organization’s stability. Here are seven common data quality issues and remedies in the insurance industry.

Common Data Quality Issues and Remedies

1. Missing Data

Missing data is a prevalent challenge in the insurance industry, often stemming from customers leaving forms partially completed or omitting key fields, such as driving history or date of birth, in policy applications. Additionally, errors during data migration from legacy systems to modern platforms can exacerbate the problem, leading to gaps in critical information. These gaps affect underwriting accuracy, customer service, and compliance with regulatory requirements.

To address these issues, insurers can implement validation rules to ensure digital forms are completed before submission, reducing errors at the source. Integrating APIs to auto-populate data from trusted databases can streamline data collection while maintaining accuracy. Furthermore, workflows should be established to identify and flag incomplete entries for timely follow-up, ensuring data integrity and reducing the downstream impact on business processes.
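As an illustration of such a validation rule, the sketch below checks an application for required fields and reports which ones need follow-up. The field names and the `missing_fields` helper are hypothetical, chosen only for this example; a real form would define its own required set.

```python
from typing import Any

# Hypothetical required fields for a motor policy application (an assumption).
REQUIRED_FIELDS = ("full_name", "date_of_birth", "driving_history")


def missing_fields(application: dict[str, Any]) -> list[str]:
    """Return the required fields that are absent, None, or blank strings."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = application.get(field)
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(field)
    return missing


# A partially completed application: date of birth is blank,
# driving history was never supplied.
application = {"full_name": "Jane Doe", "date_of_birth": "", "postcode": "AB1 2CD"}
gaps = missing_fields(application)
if gaps:
    print("Flag for follow-up, missing:", gaps)
```

The same check can block submission on a digital form or, for records that already exist, feed a follow-up queue.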

2. Duplicate Data

Data duplication is a significant data quality issue, often arising when customers provide the same information through multiple channels, such as online portals or in-person interactions. Additionally, mergers and acquisitions can introduce duplicate records if proper deduplication processes are not in place. These redundancies can lead to inefficiencies, confusion in customer service, and potential errors in policy management or claims processing.

To address this, insurers can deploy data deduplication tools that automatically identify and merge duplicate records. Implementing unique customer identifiers, such as policy numbers or national IDs, ensures consistency across multiple systems and prevents duplication. Establishing robust master data management (MDM) practices further supports the creation of a single, accurate source of truth for customer data, improving operational efficiency and decision-making.
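A minimal sketch of how duplicate detection can work is shown below: records are reduced to a normalized match key, and any key shared by more than one record is reported as a candidate duplicate group. The record layout and the choice of name, date of birth, and email as the key are assumptions for illustration; commercial deduplication tools typically add fuzzy matching on top of this kind of exact-key grouping.

```python
from collections import defaultdict


def match_key(record: dict) -> tuple[str, str, str]:
    """Build a normalized key so trivially different spellings compare equal."""
    name = " ".join(record["name"].lower().split())  # collapse case and spacing
    email = record["email"].strip().lower()
    return (name, record["date_of_birth"], email)


def find_duplicates(records: list[dict]) -> list[list[int]]:
    """Return groups of record ids that share the same match key."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# Hypothetical records captured through different channels.
records = [
    {"id": 1, "name": "Jane  Doe", "date_of_birth": "1985-04-12", "email": "Jane.Doe@example.com "},
    {"id": 2, "name": "jane doe", "date_of_birth": "1985-04-12", "email": "jane.doe@example.com"},
    {"id": 3, "name": "John Smith", "date_of_birth": "1979-11-02", "email": "john@example.com"},
]
print(find_duplicates(records))
```

Groups found this way are candidates for review and merging rather than automatic deletion, since two distinct people can occasionally share a key.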

3. Inaccurate Data

Inaccurate data is often caused by manual data entry errors, data loss during system migrations, or the absence of real-time validation checks. These inaccuracies can lead to incorrect risk assessments, policy errors, and a negative customer experience, impacting operational efficiency and regulatory compliance.

To mitigate these challenges, insurers can adopt real-time data validation checks, such as verifying postal codes and other critical fields at entry. Regular data cleansing processes help identify and rectify inaccuracies in existing records. Periodic audits and reconciliation ensure data consistency and accuracy across systems, enabling more reliable decision-making and improving overall data quality.
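A postal code check at the point of entry might look like the sketch below. It assumes US ZIP codes (five digits, optionally followed by a hyphen and four more) purely as an example; other countries need their own patterns, and a format check does not confirm that the code actually exists.

```python
import re

# Assumed format: US ZIP or ZIP+4, e.g. "12345" or "12345-6789".
ZIP_PATTERN = re.compile(r"[0-9]{5}(-[0-9]{4})?")


def is_valid_zip(value: str) -> bool:
    """Return True if the whole value matches the assumed ZIP format."""
    return ZIP_PATTERN.fullmatch(value.strip()) is not None


for candidate in ("12345", "12345-6789", "1234", "ABCDE"):
    status = "ok" if is_valid_zip(candidate) else "rejected"
    print(candidate, status)
```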

4. Inconsistent Data Formats

Inconsistent data formats arise from data being entered across multiple systems with varying conventions, such as differing date formats or address standards. The absence of standardized templates for data collection exacerbates this issue, leading to difficulties in data integration, reporting, and analysis.

To address this, insurers should establish enterprise-wide data standards for formats and conventions, ensuring uniformity across all systems. Leveraging ETL (Extract, Transform, Load) tools can harmonize data during integration processes, resolving format discrepancies. Additionally, educating staff and agents on consistent data entry practices reinforces adherence to these standards, improving data quality and usability.
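The transform step of such a pipeline can be sketched as follows, using dates as the example: each incoming value is parsed against a list of known source formats and rewritten in ISO 8601 (YYYY-MM-DD). The list of formats and the `to_iso_date` helper are assumptions for illustration.

```python
from datetime import datetime

# Source formats assumed to occur in the feeding systems, tried in order.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")


def to_iso_date(raw: str) -> str:
    """Parse a date written in any known format and return it as YYYY-MM-DD."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # not this format, try the next one
    raise ValueError(f"Unrecognised date format: {raw!r}")


for raw in ("2024-03-05", "05/03/2024", "05.03.2024"):
    print(raw, "->", to_iso_date(raw))
```

A value such as 05/03/2024 is ambiguous between day-first and month-first conventions, so in practice the format should be fixed per source system rather than guessed per value.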

5. Data Silos

Data silos are often caused by the use of disparate systems for functions like underwriting, claims processing, and customer relationship management (CRM). These isolated systems, compounded by a lack of integration between on-premises and cloud platforms, hinder data accessibility and create inefficiencies, such as redundant processes and incomplete customer insights.

To overcome this challenge, insurers can implement centralized data warehouses or data lakes, consolidating data from various systems into a unified repository. APIs and middleware solutions can enable interoperability, allowing seamless communication between different platforms. Promoting cross-department collaboration and establishing data-sharing policies further break down silos, fostering a more integrated and efficient data ecosystem.
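At a very small scale, consolidation amounts to keying every system's records on a shared customer identifier. The sketch below assumes each source exposes rows with a `customer_id` field and at most one row per customer; the system names and fields are hypothetical, and a real warehouse or data lake would handle this with its own loading and modelling tools.

```python
from collections import defaultdict


def consolidate(**sources: list[dict]) -> dict[str, dict]:
    """Merge rows from several systems into one view per customer_id."""
    unified = defaultdict(dict)
    for system, rows in sources.items():
        for row in rows:
            details = {k: v for k, v in row.items() if k != "customer_id"}
            unified[row["customer_id"]][system] = details
    return dict(unified)


# Hypothetical extracts from two separate systems.
underwriting = [{"customer_id": "C1", "risk_band": "B"}]
claims = [
    {"customer_id": "C1", "open_claims": 2},
    {"customer_id": "C2", "open_claims": 0},
]

view = consolidate(underwriting=underwriting, claims=claims)
print(view["C1"])
```

Customers that appear in only one system, such as C2 here, are easy to spot in the unified view, which is itself a useful data quality signal.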

6. Unstructured Data

Unstructured data, such as claims notes, emails, and scanned PDF documents, is often stored in free-text or image formats, making it challenging to analyze and integrate with structured datasets. The lack of tools to process this data limits its usability, affecting areas like claims processing, fraud detection, and customer service.

To address this, insurers can implement AI-based natural language processing (NLP) tools to extract actionable insights from unstructured text. Optical Character Recognition (OCR) technology can digitize handwritten or scanned documents, converting them into machine-readable formats. Encouraging structured templates for data submission can further reduce reliance on unstructured data, improving accessibility and accuracy across workflows.
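To make the idea of extracting structured fields from free text concrete, the sketch below pulls a policy number and a claimed amount out of a claims note. It uses regular expressions as a simple stand-in for an NLP model, and the `POL-` policy number format and dollar amounts are assumptions invented for the example.

```python
import re

# Assumed conventions: policy numbers look like POL-123456, amounts like $1,250.00.
POLICY = re.compile(r"\bPOL-[0-9]{6}\b")
AMOUNT = re.compile(r"\$\s?([0-9][0-9,]*(?:\.[0-9]{2})?)")


def extract_fields(note: str) -> dict:
    """Return the first policy number and dollar amount found in a note."""
    policy = POLICY.search(note)
    amount = AMOUNT.search(note)
    return {
        "policy_number": policy.group(0) if policy else None,
        "claimed_amount": float(amount.group(1).replace(",", "")) if amount else None,
    }


note = "Customer called re POL-123456; estimates repair at $1,250.00 after rear collision."
print(extract_fields(note))
```

Pattern matching of this kind only works for fields with a predictable shape; free-form details such as the cause of loss are where NLP tooling earns its place.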

7. Manipulated or Fraudulent Data

Manipulated or fraudulent data poses significant financial challenges in the insurance industry, with customers sometimes providing false information to secure lower premiums or inflating claims to exploit the system. Such practices not only lead to financial losses but also undermine trust and increase operational costs for insurers.

To combat this issue, insurers can deploy fraud detection systems powered by machine learning to identify patterns and anomalies indicative of fraudulent behaviour. Cross-referencing customer and claims data with external databases, such as motor vehicle records, adds a further layer of verification. For high-risk cases, enhanced due diligence ensures a thorough review, reducing the likelihood of fraud slipping through the cracks.
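The anomaly-detection idea can be illustrated with a simple statistical stand-in for a machine learning model: the sketch below flags claim amounts that sit far from the median, using the modified z-score based on the median absolute deviation. The threshold of 3.5 is a commonly used default, and the sample amounts are invented for the example.

```python
from statistics import median


def flag_outliers(amounts: list[float], threshold: float = 3.5) -> list[int]:
    """Return indexes of amounts whose modified z-score exceeds the threshold."""
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)  # median absolute deviation
    if mad == 0:
        return []  # no spread to measure against, so nothing is flagged
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical claim amounts for similar repairs; the last one stands out.
amounts = [1200, 1350, 1100, 1280, 1190, 9800]
print(flag_outliers(amounts))
```

A flag is a prompt for review, not proof of fraud; an unusually large claim may be entirely legitimate, which is why flagged cases are routed to enhanced due diligence rather than rejected.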

Final Thoughts

Prioritizing data quality is essential not only for avoiding financial pitfalls but also for building trust with customers, investors, and regulatory bodies in an increasingly data-driven industry. By investing in robust data governance, routine data quality checks, advanced analytics, and periodic audits, insurers can mitigate the risks described above, enhance operational efficiency, and maintain compliance with regulatory requirements.

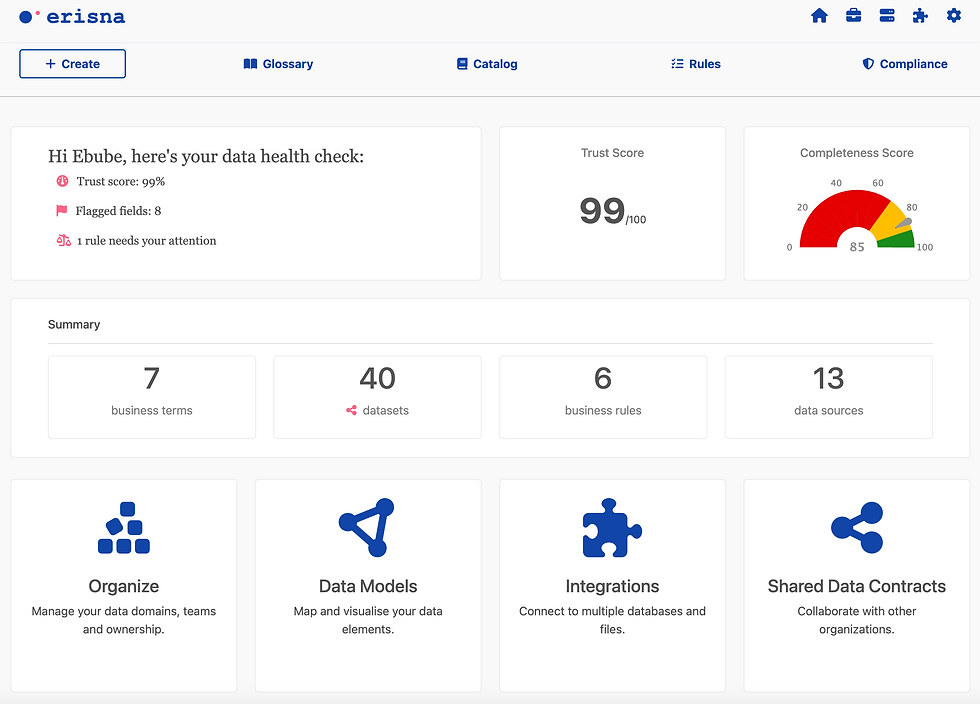

Erisna is an intuitive data governance and quality platform that enables organizations to better understand their data assets, improve data quality and speed up audit readiness. With tools for data cataloguing, profiling, validation and cleansing that can be understood across the business, Erisna enables data teams to drive efficiency and cost savings when working with data.